Dissertation Project Brief

An Interaction Design Based on LLM Association for User Attention and Gesture Operations

Origin of the IDEA

New Interactions in AR/VR

- The interaction should be intuitive, have a low learning curve, and be easy to transfer.

- New interactions don't necessarily have to replace existing traditional ones but can complement new scenarios.

History of Interaction

Traditional Human-Computer Interaction (focusing only on the era when computers became mainstream, forget about ENIAC)

- Keyboard Input - Traditional Command Line Interface

- Mouse Control - Graphical User Interface (including tablets, game controllers, etc., which also rely on a graphical interface)

- Touch Screen - Graphical Interface, with smaller devices making finger tapping more convenient

- Voice Input - Voice Assistants, devices supporting voice input, and development in AI (NLP)

In AR/VR (traditional terms), the metaverse (proposed by Meta), and spatial computing (recently released Apple Vision Pro), the essence is very similar.

Existing AR/VR Device Input and Interaction Methods:

- Headset + Controllers, where the controllers are essentially a variant of the mouse and game controllers, with cursor and click operations largely similar to traditional mouse operations on PCs: clicking, long press and drag, etc.

Apple Vision Pro offers a more diverse range of interactions:

- Virtual Keyboard (typing in the air), Voice Input, Eye Focus (expanding display for more content)

- Eye Tracking quickly provides suggestions based on context, allowing device operation based on these suggestions.

- etc. ← Click here

My Idea — An Interaction Design Based on LLM Association for User Attention and Gesture Operations

Examples

- Notice a document in a work setting, association: "Scan to PDF" operation.

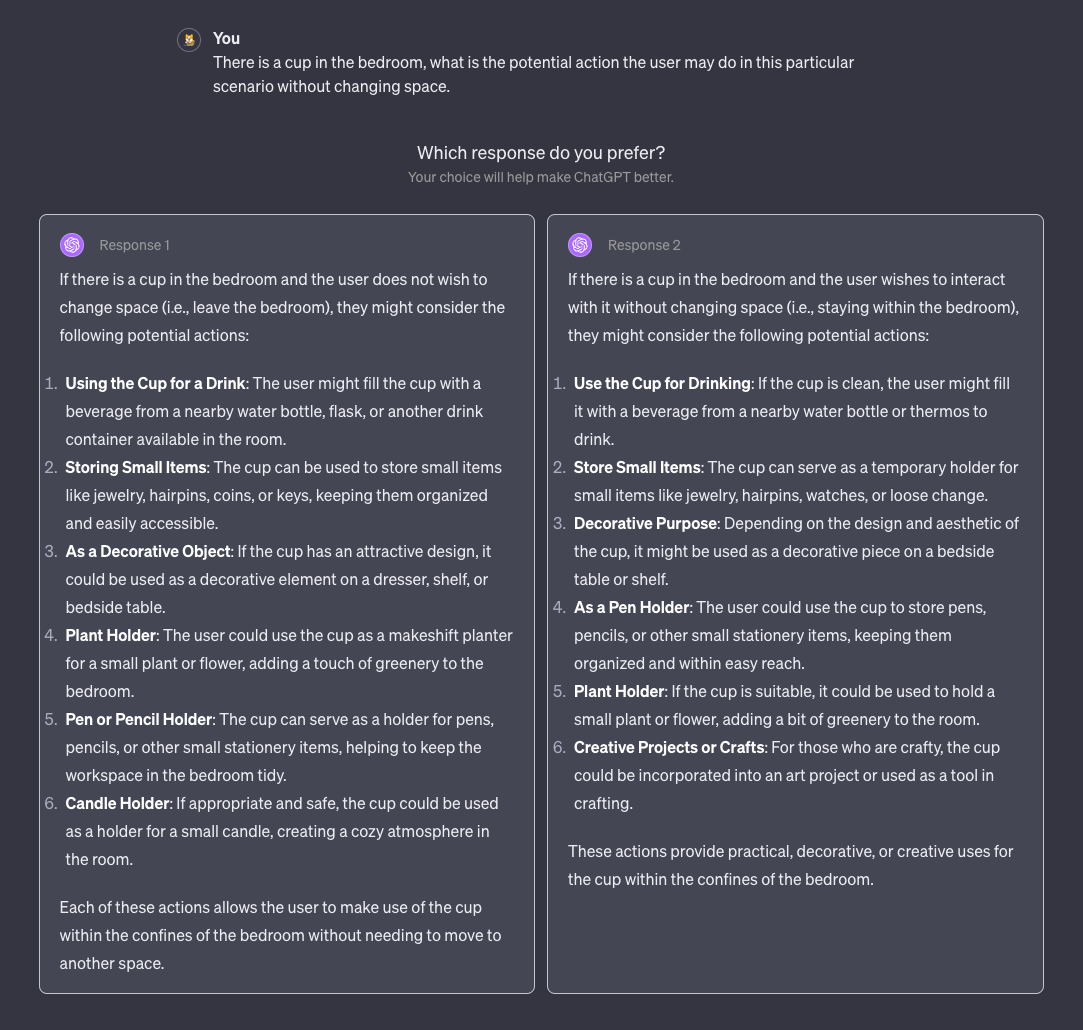

- See an empty tea cup in the bedroom, association:

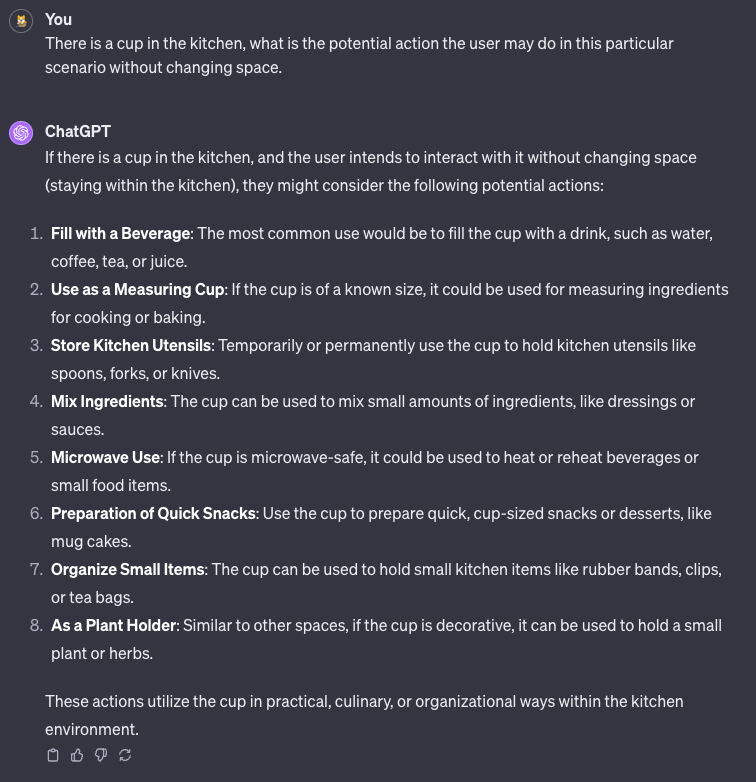

- See a tea cup in the kitchen, association:

From the overall product flow perspective

It can be divided into three parts:

- Detecting user attention or points of interest. Current eye-tracking technology effectively solves this problem.

- Semantic segmentation based on the scene, matching the user's gazing point. Recognizing the current environment and using semantic segmentation and scene classification results as prompts. Combine with LLM models to associate objects with potential related operations (hoped to be a research direction during my Ph.D. period).

- Adequately display the associative information with simple gesture operations (main content of this dissertation project).

Dissertation Plan: Only design the final interface.

Main Research Components of the Dissertation

- Direct text input to GPT-4, returning relevant information, optimizing prompts, or using basic NLP algorithms to extract words related to specific object operations for display.

- Considering the matching of the gazing point to objects and potential gesture operations, design a pop-up menu.

If time permits, consider testing existing pre-trained semantic segmentation algorithms (e.g., YOLOv8) in a specific scenario. Input an image, output semantic segmentation, use a cursor as a substitute for the gazing point, capture the semantic content pointed at by the cursor, and format it as input for the GPT-4 interface, obtaining text output from GPT-4.

Given time constraints, avoid expanding the above program to combine interface design to display pop-up menus and other content (involving front-end and back-end separate development, and the sophisticated animation effects demand high front-end development skills).